"Evolving Reflections" is an interactive digital art installation designed for a gallery setting, where visitors create a live, evolving painting simply by moving. Their bodily motions are translated into fluid visual art, with no controls to touch and no direct input required.

As visitors approach the installation, a camera captures their movements and a pose detection model processes them in real time. The system interprets these movements and transforms them into brush strokes of varying sizes and colors that blend and evolve on the digital canvas.

Process Overview

This progress update walks through the steps I took.

Conceptualization and Research

"Evolving Reflections" began with research into interactive digital art. I looked at various approaches to creating immersive, user-driven experiences and explored different technologies that could translate physical movement into visual art. This phase was crucial in identifying the two tools that form the backbone of the project: Teachable Machine for pose detection and p5.js for real-time rendering.

Prototype Development

With the core concept and technologies in place, I moved on to developing a basic prototype. This involved integrating the pose detection model with a simple rendering system that could visualize user movements as shapes on the screen. The initial focus was on ensuring that the system could reliably track and respond to movements in real time. This prototype served as a proof of concept, demonstrating that the technology could effectively translate physical actions into digital art.
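As a rough illustration of that prototype step, here is a minimal sketch of turning detected keypoints into drawable shapes. It is not the installation's actual code. It assumes PoseNet-style keypoints of the form `{ part, score, position: { x, y } }`, and the helper name `keypointsToShapes`, the `minScore` threshold, and the horizontal mirroring are all hypothetical choices made for the example.

```javascript
// Hypothetical helper: convert pose keypoints into shapes for the canvas.
// Assumes PoseNet-style keypoints: { part, score, position: { x, y } },
// with positions given in the pixel space of the camera frame.
function keypointsToShapes(keypoints, frame, canvas, minScore = 0.5) {
  // Scale factors from camera-frame pixels to canvas pixels.
  const scaleX = canvas.width / frame.width;
  const scaleY = canvas.height / frame.height;

  return keypoints
    // Drop joints the model is not confident about (assumed threshold).
    .filter((kp) => kp.score >= minScore)
    .map((kp) => ({
      part: kp.part,
      // Flip horizontally so the canvas behaves like a mirror (assumption).
      x: canvas.width - kp.position.x * scaleX,
      y: kp.position.y * scaleY,
    }));
}
```

In a p5.js sketch, each returned shape could then be drawn inside `draw()`, for example with `ellipse(shape.x, shape.y, 20, 20)`.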

Visual Refinement and Algorithm Tuning

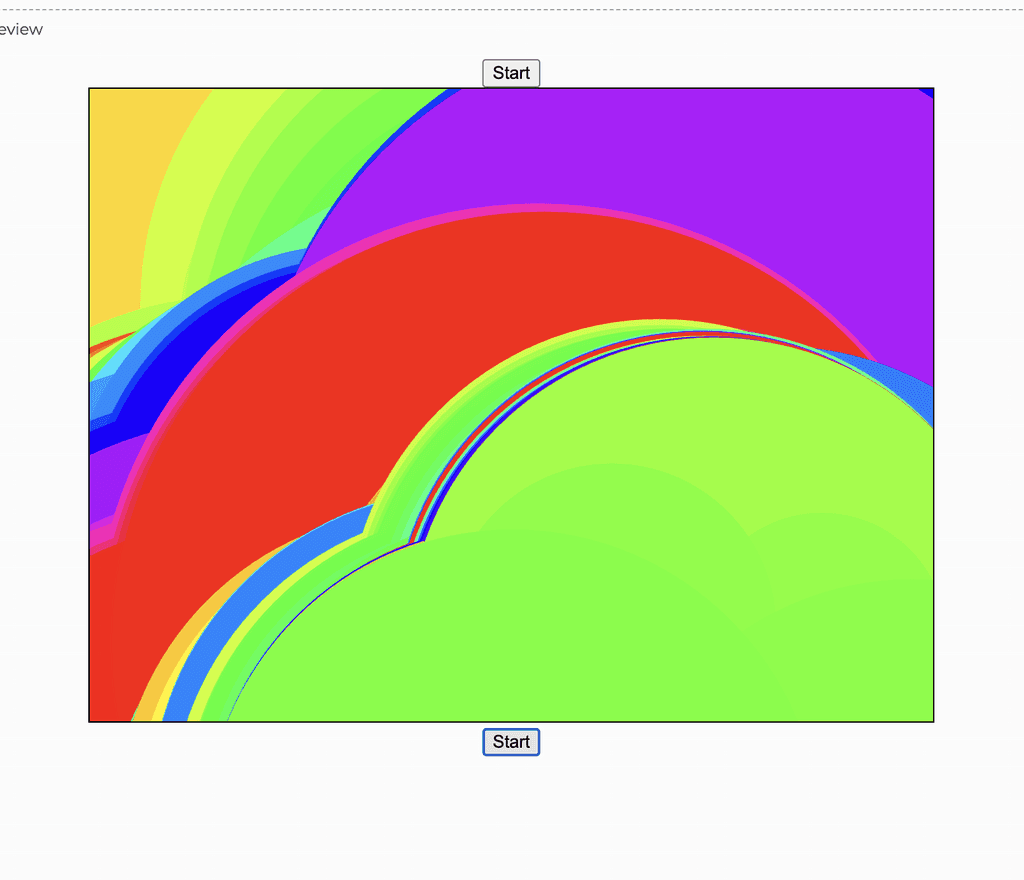

Once the basic functionality was established, the next step was refining the visual aspects of the installation. I experimented with color transitions, aiming for smooth and cohesive shifts across the color spectrum. The brush stroke effect was enhanced to mimic natural, fluid movements, creating a more organic and visually appealing experience. This phase involved fine-tuning algorithms to ensure that colors faded seamlessly into one another and that brush sizes adapted dynamically to the speed and nature of user movements.
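To make the tuning described above more concrete, the sketch below shows one way brush size and color could be derived from movement. The direction of the mapping (faster movement gives a larger brush), the constants, and the function names `brushSize` and `nextHue` are assumptions for illustration, not the values used in the installation.

```javascript
// Clamp a value to the range [lo, hi].
function clamp(value, lo, hi) {
  return Math.min(hi, Math.max(lo, value));
}

// Hypothetical mapping: the further a point moved since the last frame,
// the larger the brush. All default values here are assumed.
function brushSize(prev, curr, minSize = 4, maxSize = 40, maxSpeed = 50) {
  // Distance travelled between frames, in pixels per frame.
  const speed = Math.hypot(curr.x - prev.x, curr.y - prev.y);
  // Normalize to 0..1 so very fast movements do not exceed maxSize.
  const t = clamp(speed / maxSpeed, 0, 1);
  return minSize + t * (maxSize - minSize);
}

// Advance the hue by a small step each frame and wrap around at 360,
// so the color drifts smoothly through the whole spectrum.
function nextHue(hue, step = 0.5) {
  return (hue + step) % 360;
}
```

In p5.js, a hue value like this can be used directly after calling `colorMode(HSB, 360, 100, 100)`. A small step per frame keeps neighboring strokes close in color, which is what makes the transitions look continuous.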

User Experience Design and Final Optimization

The final phase of the project focused on optimizing the installation for a gallery environment, where ease of interaction and accessibility are paramount. I streamlined the user interface by removing any input controls, allowing the system to operate autonomously. The canvas was programmed to reset every 30 seconds, ensuring a continuous flow of new art for each user. This stage also included rigorous testing and adjustments to ensure the installation was both reliable and engaging, offering a smooth, immersive experience for all who interact with it.
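The 30-second reset described above can be sketched as a simple elapsed-time check. This is a minimal example, not the installation's actual code; `RESET_INTERVAL_MS` and `shouldReset` are hypothetical names.

```javascript
// Reset interval: 30 seconds, expressed in milliseconds.
const RESET_INTERVAL_MS = 30 * 1000;

// Hypothetical helper: true once the interval has elapsed since the last reset.
function shouldReset(nowMs, lastResetMs, intervalMs = RESET_INTERVAL_MS) {
  return nowMs - lastResetMs >= intervalMs;
}

// Possible use inside a p5.js draw() loop (lastReset is a sketch-level variable):
//   if (shouldReset(millis(), lastReset)) {
//     background(0);        // clear the canvas
//     lastReset = millis(); // start the next 30-second window
//   }
```

Comparing timestamps rather than counting frames keeps the reset at 30 seconds even if the frame rate drops while the pose model is running.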